Constrained Optimization#

This section covers local constrained optimization. We will be using scipy.optimize module for minimizing an analytical function with constraints. Going through the following documentation will help in understanding the code:

Below block of code imports all the required packages:

import numpy as np

import matplotlib.pyplot as plt

from scipy.optimize import minimize

from scipy.optimize import NonlinearConstraint

Consider the optimization problem described below:

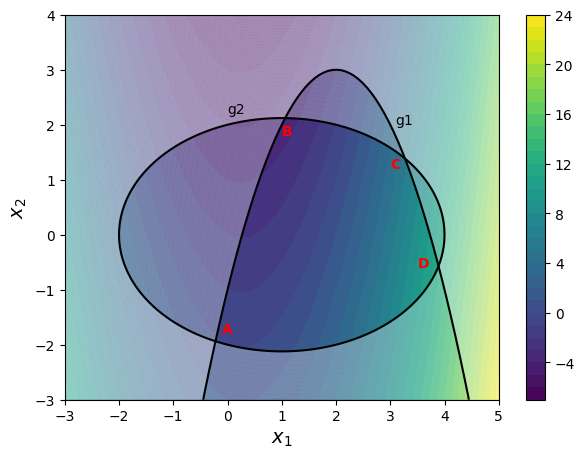

Question: Plot the objective function contour and constraint boundaries.

Answer: Below block of code defines various functions. Read the comments to understand the code.

def f(x):

"""

Function which calculates the function value at given x.

Input:

x - 1D/2D numpy array

"""

# Number of dimensions of input

dim = x.ndim

# To ensure n x 2

if dim == 1:

x = x.reshape(1,-1)

x1 = x[:,0]

x2 = x[:,1]

y = x1**2 - x1/2 - x2 - 2

y = y.reshape(-1,1)

if dim == 1:

y = y.reshape(-1,)

return y

def g1(x):

"""

Function which calculates the g1 value at given x.

Input:

x - 1D/2D numpy array

"""

# Number of dimensions of input

dim = x.ndim

# To ensure n x 2

if dim == 1:

x = x.reshape(1,-1)

x1 = x[:,0]

x2 = x[:,1]

g1 = x1**2 - 4*x1 + x2 + 1

g1 = g1.reshape(-1,1)

if dim == 1:

g1 = g1.reshape(-1,)

return g1

def g2(x):

"""

Function which calculates the g2 value at given x.

Input:

x - 1D/2D numpy array

"""

# Number of dimensions of input

dim = x.ndim

# To ensure n x 2

if dim == 1:

x = x.reshape(1,-1)

x1 = x[:,0]

x2 = x[:,1]

g2 = x1**2 / 2 + x2**2 - x1 - 4

g2 = g2.reshape(-1,1)

if dim == 1:

g2 = g2.reshape(-1,)

return g2

def plot_prob():

"""

Function which plots the function contour and constraints

"""

num_points = 100

# Defining x and y values

x = np.linspace(-3,5,num_points)

y = np.linspace(-3,4,num_points)

# Creating a mesh at which values and

# gradient will be evaluated and plotted

X, Y = np.meshgrid(x, y)

# Evaluating the function values at meshpoints

obj = f(np.hstack((X.reshape(-1,1),Y.reshape(-1,1)))).reshape(num_points,num_points)

const1 = g1(np.hstack((X.reshape(-1,1),Y.reshape(-1,1)))).reshape(num_points,num_points)

const2 = g2(np.hstack((X.reshape(-1,1),Y.reshape(-1,1)))).reshape(num_points,num_points)

# Plotting the filled contours

fig, ax = plt.subplots(figsize=(7,5))

CS = ax.contourf(X, Y, obj, levels=30)

fig.colorbar(CS, orientation='vertical')

# Plotting g1

ax.contour(X, Y, const1, levels=[0], colors="k")

ax.contourf(X, Y, const1, levels=np.linspace(0,const1.max()), colors="white", alpha=0.3, antialiased = True)

ax.annotate('g1', xy =(3.1, 2.0))

# Plotting g2

ax.contour(X, Y, const2, levels=[0], colors="k")

ax.contourf(X, Y, const2, levels=np.linspace(0,const2.max()), colors="white", alpha=0.3, antialiased = True)

ax.annotate('g2', xy =(0.0, 2.2))

ax.set_xlabel("$x_1$", fontsize=14)

ax.set_ylabel("$x_2$", fontsize=14)

# Few annotations

ax.annotate('A', xy =(-0.1, -1.8), c='r', fontweight='bold')

ax.annotate('B', xy =(1.0, 1.8), c='r', fontweight='bold')

ax.annotate('C', xy =(3.0, 1.2), c='r', fontweight='bold')

ax.annotate('D', xy =(3.5, -0.6), c='r', fontweight='bold')

return ax

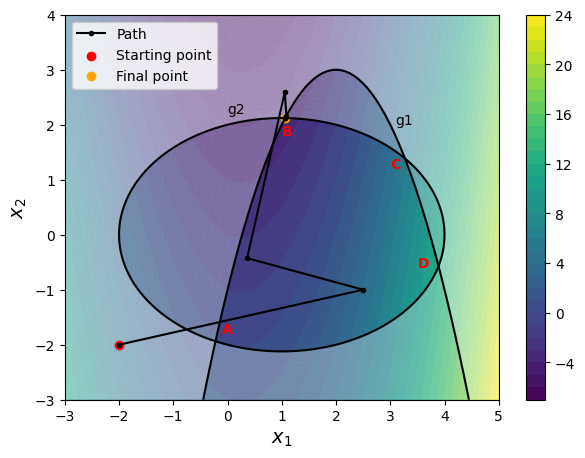

Below block of code makes a contour plot of the objective with constraints using the functions defined in previous block:

plot_prob()

<Axes: xlabel='$x_1$', ylabel='$x_2$'>

The black curves denote \(g_i=0\) and the region ABCDA is feasible, remaining design space (white masked region) is infeasible.

Penalty method#

The goal of penalty methods is to convert a constrained optimization problem into an unconstrained optimization problem by adding a penalty term to the objective function. This penalty term makes objective function larger when the constraints are violated, otherwise penalty is zero. There are many ways to implement penalty function but we will use quadratic penalty function here. Optimization problem statement will be transformed to:

So, the second and third term will be zero when the constraints are satisfied. Otherwise, the penalty term will be positive and will increase the objective function value. Now, the problem is unconstrained and we can use methods like BFGS or Conjugate Gradient to solve the above problem.

Below block of code defines various functions which are used during the optimization. Read comments in the function for more details.

def callback(x):

"""

Function which is called after every iteration of optimization.

It stores the value of x1, x2, and function value.

Input: Current x value

Output: None

"""

history["x1"].append(x[0])

history["x2"].append(x[1])

history["f"].append(f(x))

history["g1"].append(g1(x))

history["g2"].append(g2(x))

def opt_plots(history, method):

"""

Function used for plotting the results of the optimization.

Input:

history - A dict which contains three key-value pairs - x1, x2, and f.

Each of this pair should be a list which contains values of

the respective quantity at each iteration. Look at the usage of this

function in following blocks for better understanding.

method - A str which denotes the method used for optimization.

It is only used in the title of the plots.

"""

# Number of iterations.

# Subtracting 1, since it also contains starting point

num_itr = len(history["x1"]) - 1

# Plotting the convergence history

fig, ax = plt.subplots(figsize=(6,5))

ax.plot(np.arange(num_itr+1), history["x1"], "k", marker=".", label="$x_1$")

ax.plot(np.arange(num_itr+1), history["x2"], "b", marker=".", label="$x_2$")

ax.plot(np.arange(num_itr+1), history["f"], "g", marker=".", label="$f$")

ax.plot(np.arange(num_itr+1), history["g1"], "k--", marker=".", label="$g_1$")

ax.plot(np.arange(num_itr+1), history["g2"], "g--", marker=".", label="$g_2$")

ax.set_xlabel("Iterations", fontsize=14)

ax.set_xlim(left=0, right=num_itr)

ax.set_ylabel("Quantities", fontsize=14)

ax.grid()

ax.legend(fontsize=12)

ax.set_title("Convergence history - " + method, fontsize=14)

# Plotting function contours

ax = plot_prob()

# PLotting other things

ax.plot(history["x1"], history["x2"], "k", marker=".", label="Path")

ax.scatter(history["x1"][0], history["x2"][0], label="Starting point", c="red")

ax.scatter(history["x1"][-1], history["x2"][-1], label="Final point", c="orange")

ax.legend(loc='upper left')

Below block of code defines a function which returns penalized objective function value.

def penalized_obj(x):

"""

Function for evaluating penalized objective.

"""

# Compute objective and constraints

obj = f(x)

const1 = g1(x)

const2 = g2(x)

# Penalty multipliers

p1 = 100

p2 = 100

# Returns quadratic penalized function

return obj + p1 * max(const1,0)**2 / 2 + p2 * max(const2,0)**2 / 2

NOTE: The penalty parameters \(\lambda_1\) and \(\lambda_2\) are kept constant here, but they can be updated during the optimization process to improve the convergence.

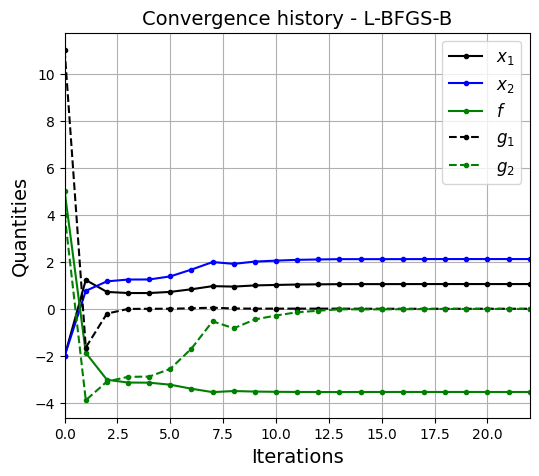

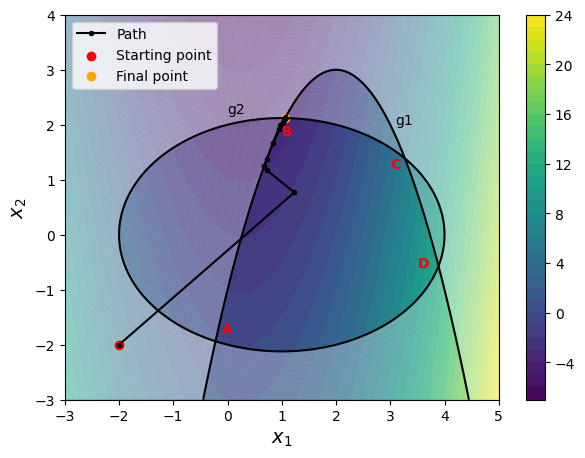

Below block of code defines various parameters for optimization using BFGS method.

# Starting point

x0 = np.array([-2.0, -2.0])

# Solver

method = "L-BFGS-B"

# Defines which finite difference scheme to use. Possible values are:

# "2-point" - forward/backward difference

# "3-point" - central difference

# "cs" - complex step

jac = "3-point"

# Defining dict for storing history of optimization

history = {}

history["x1"] = [x0[0]]

history["x2"] = [x0[1]]

history["f"]= [f(x0)]

history["g1"] = [g1(x0)]

history["g2"] = [g2(x0)]

# Solver options

options ={

"disp": True

}

bounds = [(-3,5), (-3,4)]

# Minimize the function

result = minimize(fun=penalized_obj, x0=x0, method=method, jac=jac,

callback=callback, options=options, bounds=bounds)

# Convergence plots

opt_plots(history, method=method)

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 2 M = 10

At X0 0 variables are exactly at the bounds

At iterate 0 f= 6.85500D+03 |proj g|= 7.00000D+00

At iterate 1 f= -1.86931D+00 |proj g|= 1.96247D+00

At iterate 2 f= -3.01019D+00 |proj g|= 1.00000D+00

At iterate 3 f= -3.12991D+00 |proj g|= 9.50783D-01

At iterate 4 f= -3.13199D+00 |proj g|= 9.84686D-01

At iterate 5 f= -3.21668D+00 |proj g|= 1.48971D+00

At iterate 6 f= -3.35361D+00 |proj g|= 4.16358D+00

At iterate 7 f= -3.39142D+00 |proj g|= 4.40044D+00

At iterate 8 f= -3.47010D+00 |proj g|= 2.76947D+00

At iterate 9 f= -3.50464D+00 |proj g|= 1.09467D+00

At iterate 10 f= -3.51375D+00 |proj g|= 1.12794D+00

At iterate 11 f= -3.52039D+00 |proj g|= 1.04807D+00

At iterate 12 f= -3.52312D+00 |proj g|= 9.74099D-01

At iterate 13 f= -3.52554D+00 |proj g|= 8.84907D-01

At iterate 14 f= -3.52650D+00 |proj g|= 1.19177D-01

At iterate 15 f= -3.52655D+00 |proj g|= 1.23359D-01

At iterate 16 f= -3.52672D+00 |proj g|= 2.15794D-01

At iterate 17 f= -3.52688D+00 |proj g|= 2.59330D-01

At iterate 18 f= -3.52703D+00 |proj g|= 2.70157D-01

At iterate 19 f= -3.52704D+00 |proj g|= 2.63544D-01

At iterate 20 f= -3.52713D+00 |proj g|= 2.75143D-02

At iterate 21 f= -3.52714D+00 |proj g|= 3.10332D-05

At iterate 22 f= -3.52714D+00 |proj g|= 3.67419D-09

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

2 22 100 24 0 0 3.674D-09 -3.527D+00

F = -3.5271354414899267

CONVERGENCE: NORM_OF_PROJECTED_GRADIENT_<=_PGTOL

Bad direction in the line search;

refresh the lbfgs memory and restart the iteration.

By using the penalty method, you can reach the optimum while satisfying the constraints. Optimum point lies at the intersection of both the constraints (which makes both constraints active). It is recommended to change optimization parameters (such as starting point, penalty multiplier, etc.) and see how the solution changes.

NOTE: The optimization method used is

L-BGS-Bwhich is a modified version ofBFGSfor bounded optimization.

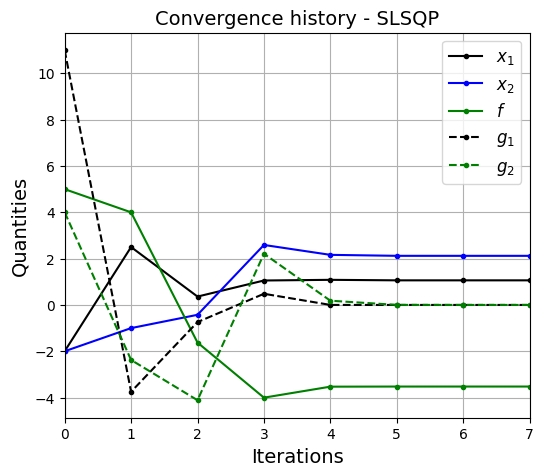

Question: Use SLSQP method for solving the original constrained optimization problem.

Answer: Below block of code defines various parameters for optimization using SLSQP method:

# Starting point

x0 = np.array([-2, -2])

# Solver

method = "SLSQP"

# Defines which finite difference scheme to use. Possible values are:

# "2-point" - forward/backward difference

# "3-point" - central difference

# "cs" - complex step

jac = "3-point"

# Defining dict for storing history of optimization

history = {}

history["x1"] = [x0[0]]

history["x2"] = [x0[1]]

history["f"] = [f(x0)]

history["g1"] = [g1(x0)]

history["g2"] = [g2(x0)]

# Solver options

options ={

"disp": True

}

# Setting bounds

bounds = [(-3,5), (-3,4)]

# Setting constraints - optimizer needs a list of Nonlinear constraints objects

# Read the documentation for more details.

constraints = [NonlinearConstraint(g1, -np.inf, 0),

NonlinearConstraint(g2, -np.inf, 0)]

# Minimize the function

result = minimize(fun=f, x0=x0, method=method, jac=jac,

constraints=constraints, callback=callback,

options=options, bounds=bounds)

# Print value of x

print("Value of x1 at optimum: {}".format(result.x[0]))

print("Value of x2 at optimum: {}".format(result.x[1]))

print("Value of g1 at optimum: {}".format(g1(result.x)))

print("Value of g2 at optimum: {}".format(g2(result.x)))

# Convergence plots

opt_plots(history, method=method)

Optimization terminated successfully (Exit mode 0)

Current function value: -3.523406546437449

Iterations: 7

Function evaluations: 36

Gradient evaluations: 7

Value of x1 at optimum: 1.0623763071639867

Value of x2 at optimum: 2.120861810878845

Value of g1 at optimum: [2.46287879e-10]

Value of g2 at optimum: [2.22692002e-07]

SLSQP reaches the same optimum as penalty method but with much less number of iterations (and function evaluations). It is specifically designed for constrained optimization and uses Quadratic Programming approach. It is recommended to use SQP based methods for constrained optimization.

SLSQP method is very sensitive to the starting point. Try changing the starting point and see how the optimization path changes. For example, try (4,-2) as the starting point and you will see that the optimization process goes to the boundary of contour plot and reverts back towards to optimum since the bounds are provided to optimizer.